Over the past three years, the adoption of AI technology in healthcare has rapidly advanced, aiming to enhance patient outcomes and reduce the cost of care delivery. The healthcare industry has seen value from a clinical perspective, leveraging AI to aid early disease detection, support image-based diagnostics, enable remote patient monitoring, and accelerate the development of new therapies. While the use of AI in healthcare is still in its early stages, it is poised to become an integral part of every aspect of care delivery.

In theory, these advancements will enable everyone to live longer, healthier lives. However, in the short term, AI poses a real risk of increasing health inequity. How is this possible?

Understanding bias in AI models

Often, disparities can be traced to how models are designed, trained, labeled, deployed, and maintained. If a model’s assumptions do not reflect real-world usage, it will produce incorrect outputs. In a healthcare setting, this has profound consequences, including incorrect or missed diagnoses, ineffective treatments, and longer recovery times. Poorly designed, trained, or maintained AI models can exacerbate health inequities and widen the health equity gap.

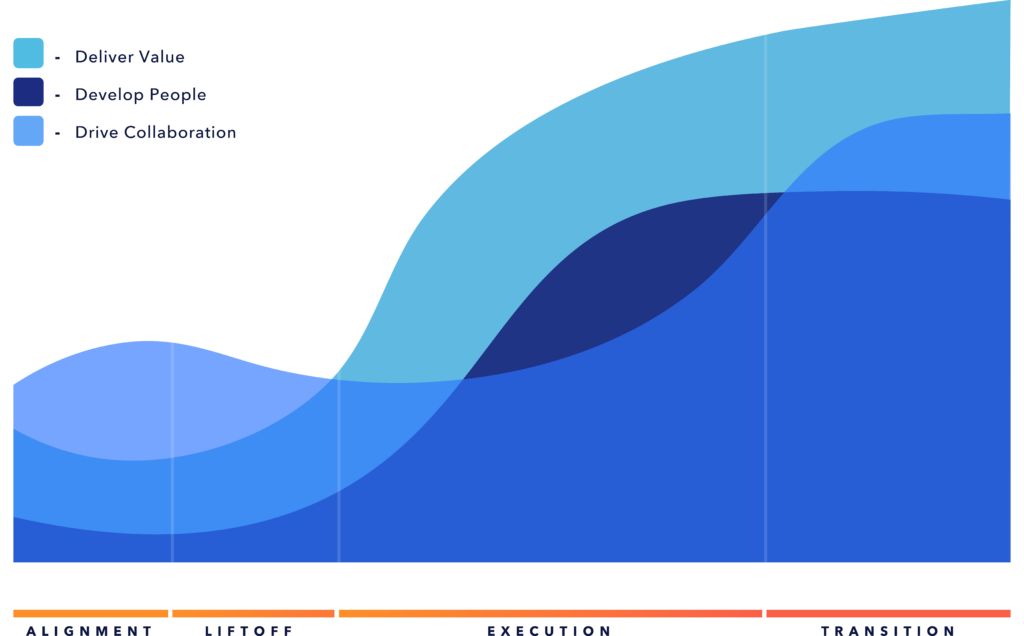

Let’s dive into an example based on the Value-Based Care paradigm. Value-Based Care (VBC) emphasizes improving health outcomes while reducing costs. Effective metrics and monitoring are critical to ensuring success in VBC models, making this area ripe for AI technology.

One key factor in the VBC model is utilization metrics for telehealth services used to manage chronic disease. An AI model may be designed to predict the effectiveness of telehealth in managing chronic conditions such as diabetes. Using AI to predict telehealth usage can help healthcare providers optimize resources, improve patient access, and enhance the quality of care. By analyzing patterns in patient behavior, demographics, and historical data, AI can offer actionable insights to forecast demand and tailor telehealth services effectively.

The model’s design is a critical first step in identifying and addressing any inherent bias. To avoid injecting bias into the model, the AI algorithms must consider the social determinants of health related to the patient cohort. For example, some patients may not have proper connectivity, technical aptitude, time availability, or the proper environment for a telehealth visit. If the model fails to incorporate these factors, or weights socioeconomic factors inappropriately, it will be biased against vulnerable patients.

Challenges with training data in clinical models

Beyond operations, properly designed models are even more critical in a clinical setting. As new, highly tailored clinical AI models are developed for specific modalities, the models are only as good as the data used for training. If the training data doesn’t represent diverse populations (e.g., race, gender, age, or socioeconomic status), the model may perform poorly for underrepresented groups.
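One way to make the representation concern concrete is a simple audit that compares each group’s share of the training data with its share of a reference population. The sketch below is illustrative only: the function name `representation_gaps`, the `age_band` attribute, the toy records, the reference shares, and the 5-point tolerance are all assumptions, not values from any real dataset or standard.

```python
from collections import Counter


def representation_gaps(records, attribute, reference_shares, tolerance=0.05):
    """Return groups whose share of the training data falls short of the
    reference population share by more than `tolerance`."""
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if expected - observed > tolerance:
            gaps[group] = {"observed": round(observed, 3), "expected": expected}
    return gaps


# Hypothetical toy training set: 100 records with one demographic attribute.
training_records = (
    [{"age_band": "18-39"}] * 60
    + [{"age_band": "40-64"}] * 38
    + [{"age_band": "65+"}] * 2
)

# Hypothetical reference shares for the population the model will serve.
reference_shares = {"18-39": 0.35, "40-64": 0.40, "65+": 0.25}

gaps = representation_gaps(training_records, "age_band", reference_shares)
print(gaps)
```

In this toy data only the oldest age band is flagged. A real audit would likely cover several attributes at once and their intersections, since a dataset can look balanced on each attribute individually and still miss specific subgroups.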

A commonly cited example is skin cancer detection models trained predominantly on images of lighter skin tones. Such models may fail to detect certain skin conditions in darker skin tones. Breast cancer research offers another example. Failing to train models on image data from diverse populations, accounting for differences in age, ethnicity, breast density, and geographic region, can result in models that do not deliver early detection or improved outcomes for a wider range of patients.
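A gap like this is often invisible in a single overall accuracy figure, so it can help to report sensitivity (the share of true cases the model catches) separately for each group. The following is a minimal sketch under stated assumptions: the function name `sensitivity_by_group`, the group labels, and the toy labels and predictions are all hypothetical and do not come from any real model.

```python
from collections import defaultdict


def sensitivity_by_group(y_true, y_pred, groups):
    """Sensitivity (true positive rate) per group: TP / (TP + FN).

    Groups with no positive cases are omitted, because sensitivity is
    undefined for them.
    """
    true_positives = defaultdict(int)
    false_negatives = defaultdict(int)
    for truth, prediction, group in zip(y_true, y_pred, groups):
        if truth == 1:
            if prediction == 1:
                true_positives[group] += 1
            else:
                false_negatives[group] += 1
    seen = set(true_positives) | set(false_negatives)
    return {
        group: true_positives[group]
        / (true_positives[group] + false_negatives[group])
        for group in seen
    }


# Hypothetical toy data: 1 = condition present, 0 = condition absent.
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 0, 0]
groups = ["group_a"] * 5 + ["group_b"] * 5

per_group = sensitivity_by_group(y_true, y_pred, groups)
print(per_group)
```

Here the model catches three of four true cases in `group_a` but only one of four in `group_b`, a difference that an aggregate metric would hide.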

Addressing historical bias in AI

Clinical models also need to observe and address historical bias, which may come in the form of legacy data that served as the basis for the model when it originated. Most health AI models are developed at large research hospitals and often include clinical data from diverse patient cohorts. However, they tend to be biased toward patients from higher socioeconomic backgrounds, primarily those with health insurance and the resources to participate in clinical studies.

Historical healthcare data often underrepresents marginalized groups, such as racial and ethnic minorities, women, and low-income populations. This can stem from differences in healthcare funding, job availability, mobility, and access to services, including imaging, labs, and pharmacy services. The limited availability of data during the creation of the initial dataset will hinder clinical effectiveness, and this challenge may persist even as the model is subsequently trained on a more diverse dataset.

The impact of bias in AI healthcare models

The most apparent consequence of bias is reduced clinical efficacy and limited utility. If healthcare AI models fail to deliver improved patient outcomes, their value and relevance diminish significantly. Poorly designed models can lead to diagnostic errors, delayed treatments, or inappropriate interventions for certain populations. Furthermore, the models could perpetuate systemic inequity and be perceived as unreliable. As clinicians lose confidence, adoption of AI technology in the clinical setting will slow and become increasingly challenging.

4 ways to improve the utility and equity of healthcare models

Four practices can improve healthcare AI models: governance through policy and regulation, stakeholder engagement, intentional design, and observability through a formalized MLOps capability.

- Governance: Beginning with model governance, policies and regulations can be developed to ensure that clinical model design and development include evidence-based reporting on diversity in training datasets and on model performance across demographics before deployment. Formalized actions will help ensure that diversity in the data is considered, modeled, and tested to avoid any known bias.

- Stakeholder engagement & advocacy: An intentional approach to stakeholder engagement is also critical to address potential bias in model development. Through purposeful collaboration with diverse community representatives, healthcare providers, and researchers, the developers can ensure equitable design and deployment of AI models. With the input of assumptions and perspectives from a representative community, the dataset will be more robust and will provide more clinical utility from the start.

- Intentional design: Thoughtful design that draws on health equity research and social determinants of health (SDOH) perspectives can provide insights critical to model design. Health equity research explores disparities in care delivery for disadvantaged patients and supplies data points that can be used to augment the models. Incorporating data and assumptions that specifically aim to improve care for underserved communities makes the resulting models more useful to those communities.

- Observability: MLOps enables continuous monitoring of deployed models and serves as a valuable approach for identifying and addressing model bias. Best practices involve establishing an MLOps framework to actively oversee model performance, detect potential biases, and implement mechanisms for retraining and redeploying models as needed. This proactive approach is essential to maintaining the clinical effectiveness of models throughout their lifecycle in real-world applications.
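As one illustration of the observability practice, a monitoring job might compare each group’s recent performance with the value recorded at deployment and surface any group that has slipped. This is a sketch only, not a feature of any particular MLOps platform: the function name `groups_needing_review`, the group names, the metric values, and the 0.05 threshold are all assumptions chosen for the example.

```python
def groups_needing_review(baseline, recent, max_drop=0.05):
    """Compare recent per-group metric values with those recorded at
    deployment and return the groups that need attention."""
    flagged = {}
    for group, base_value in baseline.items():
        current = recent.get(group)
        if current is None:
            # A group with no recent data is surfaced rather than ignored.
            flagged[group] = "no recent data"
        elif base_value - current > max_drop:
            flagged[group] = f"dropped {base_value - current:.2f}"
    return flagged


# Hypothetical sensitivity values at deployment and in the latest window.
baseline = {"urban": 0.90, "rural": 0.88, "uninsured": 0.85}
recent = {"urban": 0.89, "rural": 0.79}

flagged = groups_needing_review(baseline, recent)
print(flagged)
```

Treating missing data as a flag is a deliberate choice in this sketch: a group that stops appearing in monitoring data may have lost access to the service, which is itself an equity signal worth investigating before any retraining decision.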

As we progress with the AI journey in healthcare, building AI models that support the entire population and actively leverage AI to close the equity gap will be critical. Representative clinical data capture, availability, and usage will allow us to drive toward improved health and longevity for the total population.