Challenges in implementing machine learning (ML) projects

One of the reasons machine learning (ML) projects do not yield the expected financial or operational results is that companies frequently lack a clear understanding of where it makes sense to implement ML and why. Most companies are caught up in a competitive frenzy in their race to be AI-ready, while some are adopting a more ambitious vision to be AI-first. Much as in the pioneering days of electricity in the early 1900s, companies are convinced of AI's potential. They are venturing forth by trial and error to learn where and how to implement ML to drive transformative change. The beachfront of ML projects in organizations is littered with unfinished projects and undelivered promises, and leaders are rightly scrambling to find ways to increase the return on investment (ROI) of ML projects.

Measuring machine learning project success: improving decision quality and impact

The success of any ML project is measured by whether the trained model can generate predictions that markedly improve the quality of the decisions they inform, compared with how those decisions are made today. With this success criterion in mind, leaders should resist the impulse to say, “Let’s implement ML and see what insights we can generate,” and instead adopt right-to-left thinking, starting with the end result in mind. In the context of ML, this means first asking which business decision I want to improve, and what impact that improvement will have on company finances (e.g., revenue, cost of business, margin), operations (e.g., efficiency, speed of delivery, time to market), and performance (e.g., customer satisfaction, employee sentiment).

Five critical questions in the ML feasibility framework

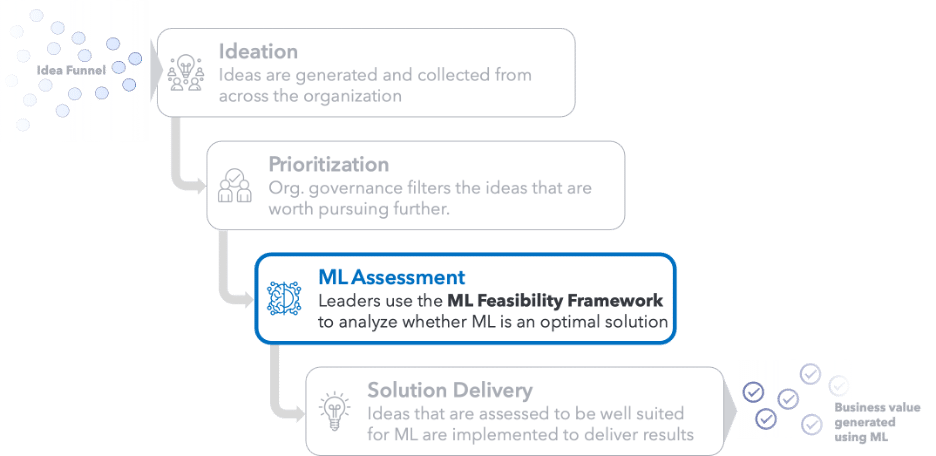

What is needed is an ML feasibility framework to gauge whether ML applies to a given business problem. With it, leaders can invest in ML projects with more confidence about why and where to do so, reducing the risk of non-delivery and non-adoption and increasing ROI.

The figure above illustrates the typical methodology most companies follow from solution ideation to value realization, augmented with the ML feasibility framework to drive higher ROI. Once ideas from the company’s idea funnel are vetted and approved, leaders should use the framework to work through its five questions and make an informed decision about whether ML should be applied to achieve the intended results before launching into the Solution Delivery phase of the methodology.

Question 1: Do I need to make this business decision at scale now or in the future?

The cost and complexity of running a modern business enterprise are increasing. Macroeconomic conditions, such as rising labor costs coupled with ever-growing customer demand for quality and speed, mean companies must make decisions in larger volumes, more quickly, and cost-effectively.

For example, Amazon’s Prime 2-day delivery model forces other retailers to make inventory decisions for more products more quickly to stay competitive. To assess the applicability of ML, leaders need to ask whether using it to generate predictions to increase the volume, speed, or simplicity of making a particular decision will positively impact company financials, operations, or performance.

If the answer to this question is yes, the benefits of improving the efficiency and quality of the decision made at scale are generally multiplicative. If the result of making the decision at scale, either now or in the future, is not clearly understood, applying ML to automate the decision may not necessarily generate the expected return on investment.

Question 2: What is the impact of ML generating inaccurate predictions?

All predictions, including those made with ML models, have, by definition, a certain degree of inaccuracy. Although model accuracy can be systematically improved over time, leaders must pay particular attention to the impact on company financials, operations, and performance precipitated by inaccurate or insufficient predictions generated using ML.

Leaders must understand the distinct impacts of false positive errors (when a model predicts that a given condition exists when it does not) and false negative errors (when a model predicts that a given condition does not exist when it does) on company financials, operations, and performance, because those impacts may vary significantly.

In manufacturing, a false positive in a quality assurance assessment (flagging a sound part as defective) could be viewed as a reasonable exercise in due diligence, while in cancer screening, a false positive could subject individuals to costly additional screenings, unneeded treatments, and emotional anguish. In either case, false negatives could lead to monetary or, worse, human loss.

Consequently, when deciding whether ML is the right solution to automate a particular decision, leaders must understand and be comfortable with the acceptable margin of error and resultant impacts. If the margin of error is deemed too high and/or the error’s impact is too consequential to company financials, operations, or customer outcomes, as compared to deciding without ML, then ML may not be an appropriate solution.
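To make this comparison concrete, the following Python sketch weighs each error type by its own cost and compares an ML model against the current decision process. Everything in it is a hypothetical assumption for illustration: the `Outcomes` class, the `error_cost` and `ml_is_cheaper` helpers, the outcome counts for 10,000 quality-assurance decisions, and the per-error costs. It shows how a model with lower overall accuracy can still be the cheaper option, and how the verdict flips when false positives become more expensive.

```python
from dataclasses import dataclass


@dataclass
class Outcomes:
    """Outcome counts for a batch of decisions (all figures are hypothetical)."""
    true_pos: int
    false_pos: int
    true_neg: int
    false_neg: int

    def accuracy(self) -> float:
        total = self.true_pos + self.false_pos + self.true_neg + self.false_neg
        return (self.true_pos + self.true_neg) / total


def error_cost(o: Outcomes, cost_fp: float, cost_fn: float) -> float:
    """Total cost of wrong decisions, weighting each error type by its own cost."""
    return o.false_pos * cost_fp + o.false_neg * cost_fn


def ml_is_cheaper(baseline: Outcomes, model: Outcomes,
                  cost_fp: float, cost_fn: float) -> bool:
    """True if the model's errors cost less than those of today's process."""
    return error_cost(model, cost_fp, cost_fn) < error_cost(baseline, cost_fp, cost_fn)


# 10,000 hypothetical QA decisions: 200 truly defective units, 9,800 sound ones.
baseline = Outcomes(true_pos=150, false_pos=300, true_neg=9500, false_neg=50)
model = Outcomes(true_pos=180, false_pos=600, true_neg=9200, false_neg=20)

print(baseline.accuracy(), model.accuracy())  # 0.965 0.938

# Scenario A: a false positive costs a cheap re-inspection; a miss is expensive.
print(error_cost(baseline, 20, 500), error_cost(model, 20, 500))  # 31000 22000

# Scenario B: false positives are costly too, so the same model no longer pays off.
print(error_cost(baseline, 100, 500), error_cost(model, 100, 500))  # 55000 70000
```

The specific numbers matter less than the structure: whether ML is acceptable depends on the cost of each error type, and those costs typically have to come from the business rather than from the model itself.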

Question 3: What are the upstream and downstream impacts?

Often, companies implement ML models as a point solution to optimize a component of a larger system. This makes sense when the model optimizes a largely manual function that is loosely coupled with other parts of the enterprise.

However, such an optimization may have unanticipated adverse cascading effects elsewhere in the value chain. Leaders need to assess whether increasing the throughput of decision-making, powered by ML-generated predictions, will cause unintended disruption or additional cost in other parts of the organization that may not yet be ready for it.

For example, consider an ML solution that improves the efficiency of retail inventory management. If upstream suppliers are unable to increase production enough to meet faster order rates, or downstream warehouse teams cannot take in the just-in-time volume of inventory without significantly increasing staff, then automating inventory management decisions may simply create new bottlenecks or, at the very least, shift the existing bottleneck to another part of the supply chain.

Constraints and rate limits throughout the value chain should be examined to fully contextualize the total effect of the ML solution. Is the upside of the desired change greater than the downside of the induced disruptions?

To answer this question, leaders need to consider whether the upstream and downstream impacts of optimizing a point solution are disruptive to the overall enterprise before deciding whether to use ML to automate a particular business decision.

Question 4: What is the impact on the organization’s goals and individual users?

Companies often fall into the trap of committing to organization-wide transformation without paying sufficient attention to understanding how such changes will impact individual end-users, inside and outside of the organization.

Unfortunately, it is all too common that these broad strategic goals create efficiencies while disrupting individual consumers who are left wondering, ‘What’s in it for me?’

One example is an ML model, trained to improve an error-prone decision, that is either not seamlessly integrated into the existing toolset or so opaque that end users neither trust nor understand how it generates predictions. The well-documented explainability problem of ML may undermine end-user accountability and impinge too heavily on end users’ autonomy and on the intuition they have built from experience.

Leaders who drive effective ML initiatives engage early and often with stakeholders across the value chain (including the operations staff who must rely on the derived insights, and end users, whether business-to-business or direct-to-consumer) to realize the value of the insights produced and overcome the “last mile problem.”

Lack of adoption or usage is one of the primary reasons ML projects fail to deliver results, which means that leaders must define success criteria for ML projects to include broad organizational key performance indicators that encompass adoption and tangible benefits across all affected stakeholders.

Question 5: What underlying question needs to be answered through ML predictions to improve decision quality?

If the answers to the previous four questions indicate that ML is the right solution to improve the quality and speed of a particular business decision, the final exercise leaders must go through is reframing that decision as a prediction problem.

Although it is relatively straightforward to recognize that a business decision needs to be improved, it is often harder to decompose that decision into the specific predictions needed to improve its quality, speed, and accuracy. Leaders need to understand that machine learning improves the accuracy and consistency of predictions in a targeted manner; it is not a catch-all solution to vaguely defined problems.

For example, in a medical context, the decision of whether to perform a risky operation for a given condition might be recognized as a candidate for improvement, but what is the actual prediction that must be made? Is it the likelihood of the condition worsening? The likelihood of survival? Or the likelihood that a previously unrecognized correlated factor is present or will develop?

Decisions often boil down to one or more non-obvious elements that must be predicted accurately to make the best choice possible. Leaders who achieve this recognition can better direct their organizations toward the most appropriate and highest-yield candidates for ML solutions.
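As a hedged illustration using the retail inventory example from Question 3, the Python sketch below separates a business decision (how many units to reorder) from the single prediction it depends on (expected demand). The `InventoryPosition` class, the `reorder_quantity` rule, and the safety-stock logic are hypothetical simplifications, not a recommended inventory policy. The point is that the ML model's job shrinks to one well-defined quantity, while the rule that turns the prediction into a decision stays explicit.

```python
from dataclasses import dataclass


@dataclass
class InventoryPosition:
    """Current state of one product (hypothetical fields for illustration)."""
    on_hand: int
    on_order: int
    safety_stock: int


def reorder_quantity(position: InventoryPosition, predicted_demand: float) -> int:
    """Hypothetical business rule: cover forecast demand plus a safety buffer.

    The only input an ML model needs to supply is `predicted_demand`; the rule
    that converts that prediction into an order stays owned by the business.
    """
    needed = (predicted_demand + position.safety_stock
              - position.on_hand - position.on_order)
    return max(0, round(needed))  # never order a negative quantity


position = InventoryPosition(on_hand=40, on_order=10, safety_stock=15)
print(reorder_quantity(position, predicted_demand=72.4))  # 37
print(reorder_quantity(position, predicted_demand=20.0))  # 0
```

Framing the problem this way also makes Question 2 easier to answer, because errors in the demand prediction translate directly into over- or under-ordering that the business can put a cost on.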

Reframing decisions as prediction problems

The questions in this ML feasibility framework are not technical. Leaders need to work through them together with business stakeholders to assess whether ML applies. Yet it is all too common for companies to bring in business stakeholders only after an idea has been conceived, reviewed, approved, and experimented on using ML.

Implementing this framework may slow time-to-market for some ideas as leaders and business stakeholders consider the questions. However, the time given up is a worthwhile tradeoff for two other critical outcomes.

First, collaborating with business stakeholders to experiment and learn with ML before a project is initiated helps build advocacy for change and drive downstream adoption. Business stakeholders learn both what is possible with ML and where its constraints lie, and they can better support ML projects by giving the technical delivery team the business and data context it needs.

Second, leaders can be more thoughtful and strategic with ML investments by focusing on generating business value. By applying the ML feasibility framework, leaders can help clean up the proverbial beachfront of failed ML projects and use ML as a precision tool to solve problems rather than as a hammer looking for nails.