When discussing the implications of generative AI on a recent podcast, Jeff Bezos used the analogy of Galileo and the telescope to make a compelling point: generative AI is unlike other technological breakthroughs because it is a discovery rather than an invention. The telescope itself was an invention, but seeing four moons orbiting Jupiter was a discovery.

Generative AI is more like the discovery of something fundamental.

Bezos also used the analogy of an airliner to support his point. A 787 airliner is a very complicated piece of technology. It has schematics; it is designed and tested to be entirely predictable, which is essential when flying people around the globe. It is a highly engineered object. We designed it, we know how it behaves, we don’t want surprises.

With generative AI, we are constantly getting surprised by its capabilities.

When you ask people working at the forefront of generative AI, they will invariably say they don’t know where it is going or what its implications will be in the medium to long term (three to five years). In another podcast, Ezra Klein said that many of the people he interviewed think of themselves as sorcerers conjuring spirits out of a smoky portal. When that spirit walks out, it may cure cancer or destroy the world. This technology is hard to predict, particularly in the longer term.

Making sense of generative AI

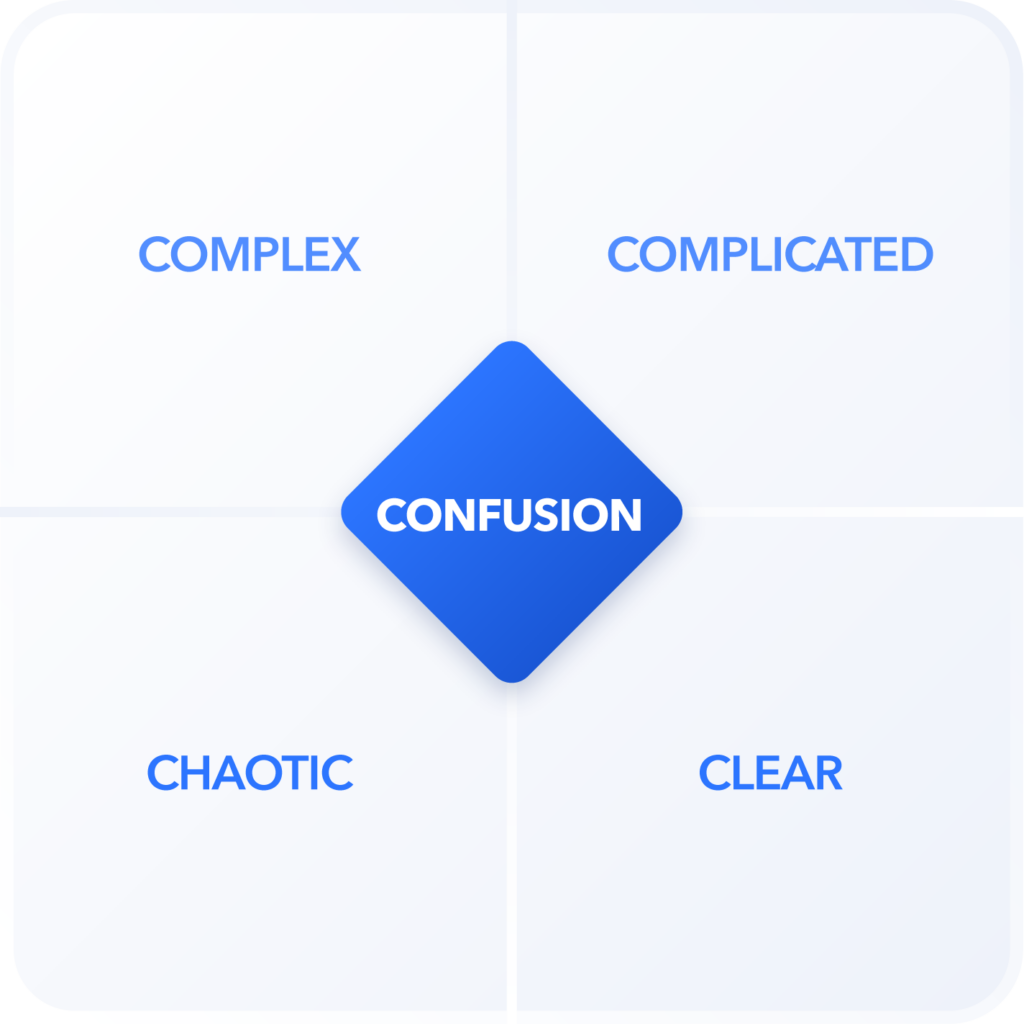

At Pariveda, we put a strong focus on applying frameworks to understand problems, define strategy, and deliver value for our clients. One framework we use extensively, developed by David A. Snowden in 1999, is called Cynefin (pronounced Kuh-NEV-in). It divides decision-making into five domains: clear, complicated, complex, chaotic, and confusion. Depending on the nature of the problem you are trying to solve, the framework recommends approaches suited to the domain that problem falls in. How can we use Cynefin to better understand generative AI? Can the framework offer tools we could deploy to define a better strategy around generative AI?

What are the five domains of the Cynefin framework?

One of the Cynefin framework’s strengths is that it provides guidance on managing problems in each domain. It prescribes a series of actions to help understand and manage the problem or situation one encounters. Let’s consider each domain to better understand Cynefin and how it can be applied to generative AI.

Clear

This domain represents predictable, easy-to-understand problems that have defined solutions. For example, to keep your car running, you must fill it with gas. If you don’t, you end up on the side of the road. It is a very predictable and deterministic outcome. For the clear domain, the guidance is to sense, categorize, and respond. Using the same example, if your gas tank is almost empty and you plan on driving to a ski slope two hours away, you need to put gas in the car. You sense that the car is low on gas through the fuel gauge. You categorize the situation and identify a well-defined action based on the gauge’s reading: “Fill the car with gas.” This problem has been solved before, and there is a well-defined best practice for it. You then respond by pulling into a gas station and filling up.

Generative AI, in many contexts, is not this predictable. You can ask a large language model (LLM) the same question twice and get different results. In some of the proofs of concept (POCs) we have been building, demos to clients can be exciting because the system may return a completely different, non-deterministic response from the one we were expecting. We have spent a lot of time and effort making these responses more predictable. Given all of this, the clear domain offers little help in understanding generative AI.
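One common reason the same question can produce different answers is that LLMs typically generate text by sampling each next token from a probability distribution, often scaled by a temperature setting. The following is a toy sketch under stated assumptions: the four candidate tokens and their scores are hypothetical stand-ins for a real model’s output, and no actual LLM or vendor API is involved. It shows that sampling at temperature 1.0 can pick different tokens on different runs, while greedy decoding (always taking the highest-scoring token) returns the same token every time, which is one lever commonly used to make responses more predictable.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(tokens, logits, temperature, rng):
    """Pick one token: greedy (argmax) if temperature <= 0, otherwise random sampling."""
    if temperature <= 0:
        best = max(range(len(logits)), key=lambda i: logits[i])
        return tokens[best]
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical candidate tokens and scores, standing in for a real model's output
TOKENS = ["testing", "monitoring", "training", "governance"]
LOGITS = [2.0, 1.6, 1.1, 0.7]

if __name__ == "__main__":
    rng = random.Random()  # unseeded, so sampled output varies from run to run
    print("temperature 1.0:", [sample_next_token(TOKENS, LOGITS, 1.0, rng) for _ in range(5)])
    print("greedy:         ", [sample_next_token(TOKENS, LOGITS, 0.0, rng) for _ in range(5)])
```

Running the script twice will usually print different sampled lists, while the greedy list is always "testing" five times. Real models choose among many thousands of tokens at every step, so small differences compound into very different responses.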

Complicated

This domain represents something more like the 787 airliner. It is complicated but engineered to very tight tolerances so that it is predictable and deterministic. As mentioned earlier, you don’t want a nondeterministic aircraft. It should do exactly what you tell it to do every time.

For the complicated domain, one should sense, analyze, and respond. Imagine a 787 pilot sees a warning light for the left aileron before takeoff. Once the pilot senses an issue from the warning light, they call maintenance to analyze the problem and determine what is causing the light to come on. The maintenance team responds by identifying the issue and fixing it before takeoff. This is considered “good practice.” The pilot follows a defined rule book that instructs them not to take off if any warning lights are on. Over time, one may develop better ways to analyze and respond to warning lights so that passengers spend less time sitting on the tarmac. However, this is an iterative optimization to achieve a “better practice.”

Given Jeff Bezos’s point that generative AI is not an engineered piece of technology but something discovered more than built, the complicated domain does not apply when using the Cynefin framework to understand generative AI.

Chaotic

Cause and effect are unclear in the chaotic domain. Events are too confusing to wait for a knowledge-based response. An example is the beginning of the pandemic, when health providers knew people were getting sick from an infectious disease but didn’t really know what was causing it or how it was transmitted.

In the chaotic domain, one should act, sense, and respond. At the beginning of the pandemic, countries made the very difficult call to act by implementing nationwide lockdowns. They then sensed whether the lockdowns were helping and whether the disease was transmissible through the air, and responded by mandating strategies like masks that had the potential to save lives. This was a novel problem without an easy rule book to follow, but one still had to act, then sense whether that action helped, then respond with a first iteration of an approach that could be rolled out widely.

Because generative AI is changing so quickly, the chaotic domain may apply to it, particularly in the medium to long term.

Complex

This domain is one where behavior is emergent and there are unknown unknowns. You can’t take the system apart and analyze it the way you could an engineered system like the jetliner. Per Wikipedia, the complex domain represents “systems that are impervious to a reductionist approach.”

In the complex domain, one should probe, sense, and respond. Over time, the pandemic moved into this domain. Health providers gathered data to understand the disease better by probing with treatment and prevention strategies (which were not really supported by hard data at the time). They then sensed by analyzing the data to see whether there was an effect, and responded by rolling those strategies out to broader populations once the probes showed a positive effect on transmission and outcomes.

As for generative AI, many people, including Jeff Bezos in his interview, consider the behavior of LLMs emergent. This domain may therefore be more useful for understanding generative AI, particularly in the near term.

Confusion

When there is confusion, there is no clarity about which domains apply. One should break the problem apart and determine which pieces fit into which of the other domains. The goal, if achievable, is to move over time to a more predictable domain, like complicated or clear, as you understand the situation and it becomes more predictable. Many leaders likely feel some confusion when developing a strategy for generative AI as they try to understand where this technology is going.

I believe that generative AI falls between the chaotic and complex domains depending on the nature of the specific problem space one is trying to decipher.

Navigating the chaotic emergence of generative AI

When early LLMs such as GPT-2 first came out, we didn’t understand their power, and one could argue that we were in the chaotic phase. Novel behavior emerged from these models that surprised even their creators. Many organizations followed the chaotic game plan and acted first, for example by shutting down access to these models from their corporate intranets. Reasons to act this way included potential legal liability, the risk of leaking sensitive corporate data, and the risk that non-deterministic behavior or hallucinations could cause harm.

Others recommended putting development of the most advanced LLMs on hold for six months, providing time to sense and respond. This was not necessarily a wrong response, but it was truly shooting in the dark, and we can take a more deliberate approach as we get a better handle on the technology.

The chaotic interpretation also applies to generative AI in the longer term, given that the spirits coming out of the smoky portal are unpredictable over time. A 5- or 10-year strategy for this technology may not be very relevant when its foundations are changing almost monthly. To quote Bezos, “Define your strategy around things that are not going to change.” With many aspects of generative AI, that is quite difficult. In this context, you must define the actions you want to take before you really know where the technology is going.

Four ways to probe in your organization

In my assessment, the most applicable domain for generative AI in the near term is the complex domain. As mentioned above, this is where Cynefin says one should probe, sense, and respond. To follow that guidance, what probes can an organization run? Here are a few that we’ve performed at Pariveda:

Educate and inform your workforce

Embark on a mission to educate your workforce and raise awareness around generative AI. At Pariveda, we ran a generative AI summit that brought together people from multiple disciplines to understand the technology and explain more clearly how it works. We recorded those sessions and made them available to the rest of the company. Consider who in your organization could work together to organize a similar session and encourage learning opportunities.

Build proof of concepts

Proofs of concept help organizations understand the technology, determine where it is most applicable and what business problems it can potentially solve, and get a sense of how difficult or easy it is to work with. At Pariveda, we’ve invested in POCs covering both vertical and horizontal applications, both to find industry relevance and to understand which common architectures were most applicable. To support my own journey in understanding the technology, I bought an RTX 4090 graphics card and built a new system to run models locally. This let me see the relative strengths and weaknesses of different models I downloaded from Hugging Face, experiment with prompt engineering, and begin learning some of the supporting frameworks. Start by identifying experiments that can guide your generative AI strategy.

Engage partners

Connect with partners who are further along in this journey: partners who are not only actively developing this technology with a product-centric approach but are also ready to share their insights on how it can be tailored and implemented in your organization. These partners can offer guidance on the technology’s challenges and strengths, helping you discern its most effective applications and when traditional AI/ML might be a better fit. This collaboration lets you pinpoint your needs, allowing for targeted solutions where your organization can truly make an impact. For example, our deep data pipeline experience gave us a leg up in helping customers bring the right data to the model to support both training and retrieval-augmented generation (RAG) applications.

Engage clients

Understanding the core technology from partners is very helpful. But partners have generally thought about this technology from a horizontal perspective. That makes sense, as they are building the core technology for a wide variety of use cases. However, it’s important to recognize that each industry has unique challenges and requirements. This is where your expertise in specific sectors becomes invaluable. When you, as a leader, identify gaps in the core technology, opportunities arise to tailor solutions that address those needs from an industry-specific standpoint.

In the healthcare sector, for instance, this industry-specific approach has led to innovative ways of helping patients navigate complex insurance regulations to secure coverage for necessary procedures. Leveraging a deep understanding of the underlying data and business rules can make technology more applicable and beneficial to your specific context.

Learning from your clients’ experiences and concerns is also crucial. For example, the decision to use public data in initial proof-of-concept projects has been well received, because it lets organizations explore the technology’s potential without worrying about exposing sensitive or proprietary information. This collaborative learning process mitigates risks and enriches the technology’s development with real-world insights.

All these probes give us a better sense of the technology’s utility and where it can best be applied. They are starting to provide a roadmap of where we can respond and make more significant investments backed by supporting information, rather than the chaotic act of shooting in the dark. This framework is giving us a strategic direction for getting a handle on this transformational technology, much as we all gradually came to understand and control the pandemic. Our probes will help us recognize when the technology becomes more predictable and when we have to act first again.

As with COVID, a new virus will always be on the horizon. By applying this framework to generative AI, we can be in a better position to manage the change we are sure is coming. Still, we can’t become complacent. We must keep probing, sensing, and responding to that change, and not be afraid to lean back into the chaotic domain and act when something new “comes out of the smoky portal” that we don’t have a strategy for.