Just one year ago, organizations found themselves at a crossroads. Many rushed headlong into the AI revolution, scrambling to uncover high-impact AI solutions, crafting persuasive business cases, and securing funding to harness what many saw as the next great competitive advantage. Others waited on the sidelines, hesitant to dive in, unsure of the risks, and uncertain about how AI would truly reshape their industries.

Fast forward to today, and the landscape has shifted dramatically. As 2025 gets underway, the urgency around AI has never been greater. What was once an exploratory phase has now become a full-fledged race to implement AI at scale. Companies are rapidly developing use cases, launching pilot projects, and making significant investments in AI-driven innovation.

Yet, despite all the excitement, enthusiasm, and capital being poured into these initiatives, many AI projects are hitting roadblocks, struggling to get off the ground.

Does this sound familiar?

- “We completed a POC and proved the value, but we can’t get it into production.”

- “Our AI initiative was moving fast until security and privacy concerns put everything on hold.”

- “We keep hearing about responsible AI, but we don’t have a clear way to define or measure it.”

- “Our leadership is pressuring IT about AI, but we don’t have a clear path to execution.”

There’s no shortage of AI ideas, yet companies aren’t moving at the pace they want. What’s going on? Let’s examine a few perspectives:

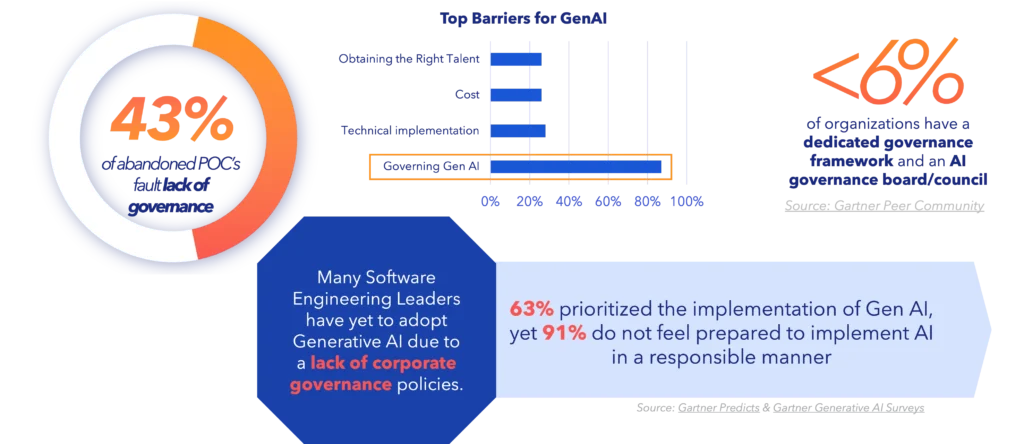

- A late 2024 survey of 2,500 C-suite executives and board directors found that most respondents do not believe their organizations are ready for broader AI deployment. In fact, only 3% consider their organizations “very ready,” while 41% say their organizations are “not ready.” (Governance of AI: A Critical Imperative for Today’s Boards)

- According to a Gartner Peer Community survey, fewer than 6% of organizations have a dedicated governance framework and an AI governance board or council. (Source: Gartner Peer Community)

- Based on Pariveda’s AI workshop data, 8 out of 10 participants express nervousness or fear about AI adoption.

Our perspective: Without AI governance, the path to production is blocked

Given these data points, it’s no surprise that well-intentioned AI champions face hurdles—but with the right governance and strategy, these roadblocks can be overcome.

Why governance in AI is a critical first step

When everyone is pushing for speed, it might feel counterintuitive to slow down and introduce governance. But if the root cause of the slowdown is fear, uncertainty, and doubt, then a lightweight governance framework is precisely what organizations (and executives) need to move faster with confidence.

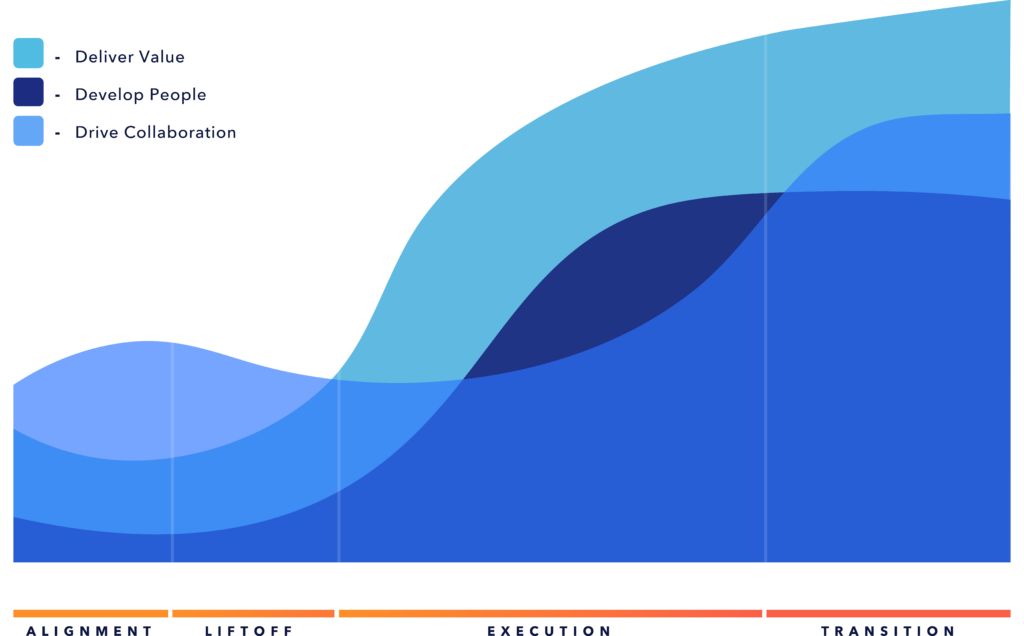

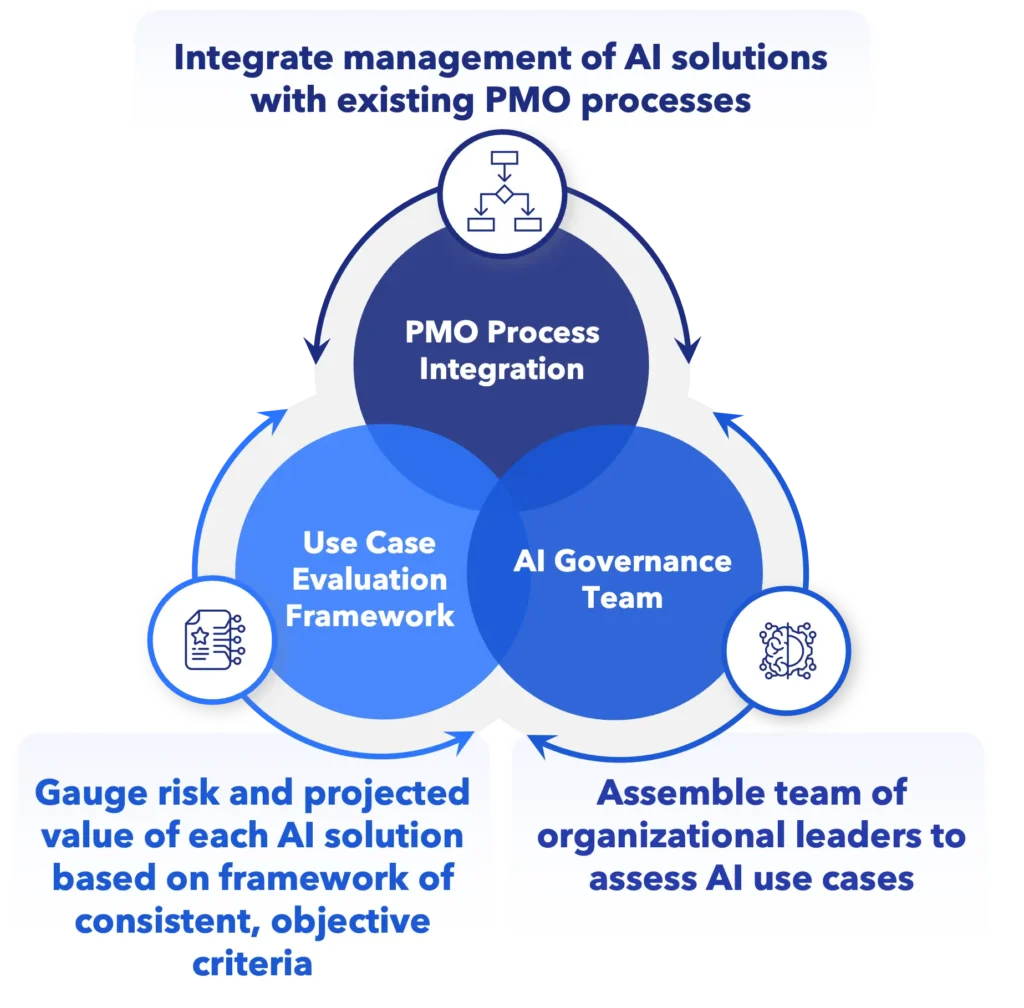

In our experience working with clients across industries, we have found the following three components of a lightweight governance process critical for success:

- Cross-functional AI governance team: Bring together legal, cyber, data, enterprise risk, functional units, IT and the PMO to proactively identify, manage and mitigate risk.

- AI intake and use case qualification: Help executives prioritize AI initiatives by weighing value, risk, and feasibility.

- PMO process integration: Establish AI-specific checkpoints throughout design, development, rollout, and ongoing monitoring.

Let’s explore these in more detail.

Cross-functional AI governance team

The first step is to assemble a cross-functional team (legal, cyber, data, enterprise risk, etc.) to set the vision and strategy for AI as well as develop a governance framework to enforce trustworthy AI.

This team provides AI risk mitigation and governance oversight: identifying, managing, and mitigating risk to protect the value of AI for the organization. It also stays up to date on AI compliance requirements and legislation so that the organization remains compliant.

It is important to have the right people on the governance team while keeping it small and nimble, so that the process remains efficient and manageable.

Best practices for setting up an AI governance team:

- Create an AI Governance vision and strategy

- Establish AI policies, standards, and guiding principles

- Activate an AI Governance Operating Model (meeting cadences, example agendas, purpose, etc.)

- Define roles and responsibilities (RACSI)

AI intake and use case qualification

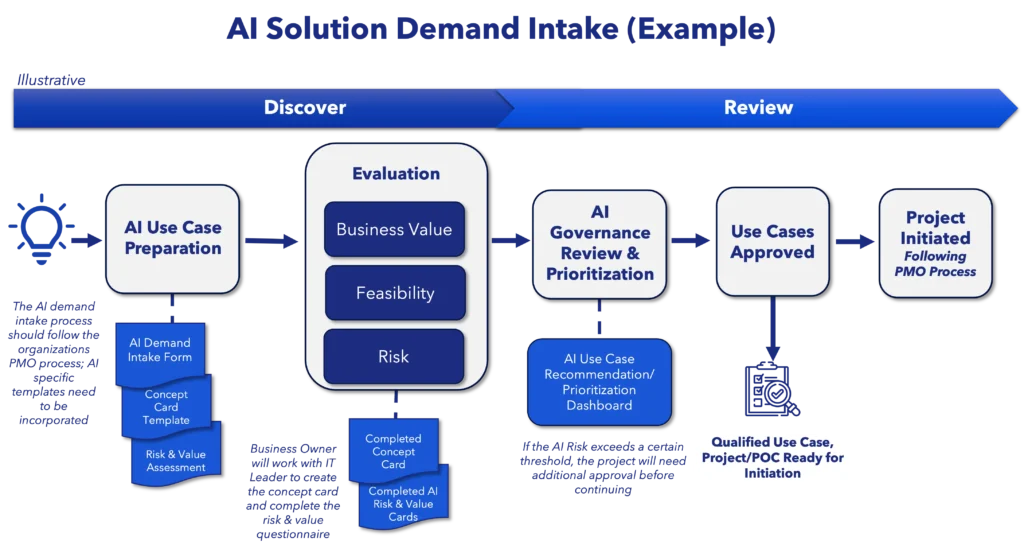

The second step is to implement an intake process that prioritizes speed over perfection. Organizations need a way to rapidly ideate, assess, and advance use cases into POCs, without fearing failure.

AI governance must balance value, feasibility, and risk as equally weighted indicators. Each organization will assess these differently, but these factors must be front and center.
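To make the idea of equal weighting concrete, here is a minimal sketch of how an intake score could be computed. The 1-to-5 scale, the `UseCase` fields, the risk inversion, and the sample use cases are all illustrative assumptions, not a prescribed scoring model; each organization would define its own scales.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCase:
    """A hypothetical intake record; every score uses an assumed 1-5 scale."""
    name: str
    value: int        # 5 = highest business value
    feasibility: int  # 5 = easiest to deliver
    risk: int         # 5 = highest risk

    def priority(self) -> float:
        """Equal-weight average; risk is inverted so lower risk scores higher."""
        for score in (self.value, self.feasibility, self.risk):
            if not 1 <= score <= 5:
                raise ValueError("scores must be between 1 and 5")
        return (self.value + self.feasibility + (6 - self.risk)) / 3


def rank(use_cases: list[UseCase]) -> list[UseCase]:
    """Return use cases sorted from highest to lowest priority."""
    return sorted(use_cases, key=lambda u: u.priority(), reverse=True)


# Made-up examples, for illustration only.
backlog = [
    UseCase("Autonomous pricing", value=5, feasibility=2, risk=5),
    UseCase("Contract summarization", value=4, feasibility=4, risk=2),
]
ranked_names = [u.name for u in rank(backlog)]
```

In this sketch, a high-value idea can still rank below a more modest one when it is hard to deliver and carries high risk, which is the trade-off an equally weighted view is meant to surface.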

Best practices for a lightweight AI intake process:

- Use a simple concept card to outline opportunity, value, feasibility, and risk.

- Implement a common AI risk questionnaire as part of intake.

- Establish an AI governance review board (at least initially) to ensure balanced risk and value assessment.

Ideally, integrate AI intake with existing PMO processes, though some companies may start separately.

Below is an example of an AI-focused intake process.

PMO process integration

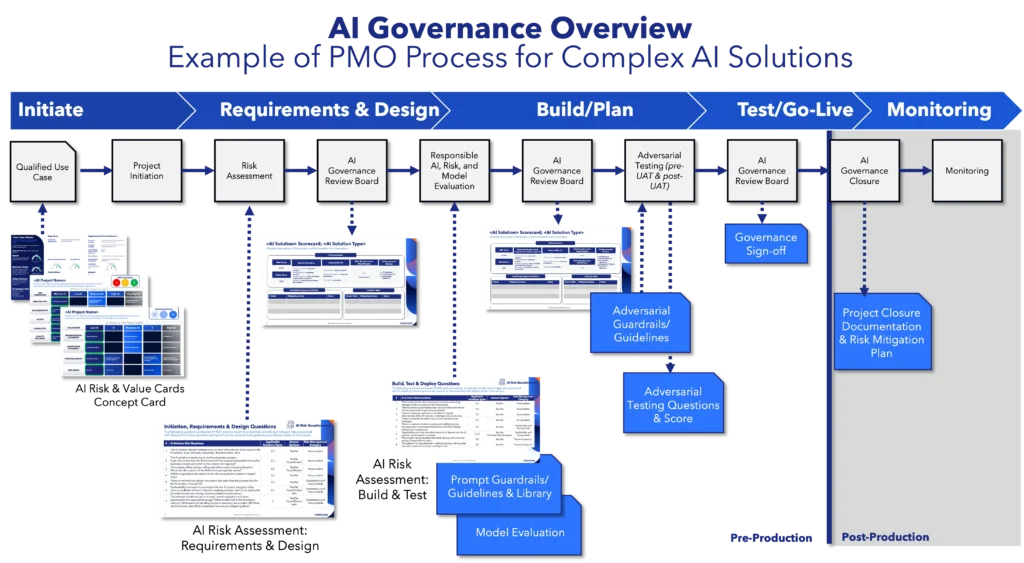

The third step is to include AI-specific checkpoints throughout design and development. If the intake process is about choosing the right investments, this is about executing them responsibly.

Most companies already have design and development processes, but these processes are inadequate for AI on their own. Fortunately, they can often be modified by adding a few lightweight AI-specific safeguards.

Best practices for AI governance in development:

- During requirements and design – Conduct a deeper risk assessment, reviewed by the AI governance team before moving to build.

- At the start of development – Conduct a prompt assessment, define prompt guardrails, and build a prompt library.

- During testing – Implement adversarial testing to assess AI vulnerabilities.

- Before go-live – Complete a final governance sign-off based on the evolved risk profile.
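The checkpoints listed above can be treated as simple stage gates. The sketch below is one possible way to encode them, assuming a PMO tool that records completed checks as strings; the stage names, check identifiers, and helper functions are hypothetical and would need to match your own process.

```python
from enum import Enum


class Stage(Enum):
    DESIGN = "requirements and design"
    DEVELOPMENT = "start of development"
    TESTING = "testing"
    GO_LIVE = "before go-live"


# Checks mirror the best practices listed above; the identifiers are illustrative.
REQUIRED_CHECKS: dict[Stage, set[str]] = {
    Stage.DESIGN: {"deep_risk_assessment", "governance_team_review"},
    Stage.DEVELOPMENT: {"prompt_assessment", "prompt_guardrails", "prompt_library"},
    Stage.TESTING: {"adversarial_testing"},
    Stage.GO_LIVE: {"final_governance_signoff"},
}


def missing_checks(stage: Stage, completed: set[str]) -> set[str]:
    """Return the AI-specific checks still outstanding for a stage."""
    return REQUIRED_CHECKS[stage] - completed


def can_exit(stage: Stage, completed: set[str]) -> bool:
    """A project may leave a stage only when no required check is missing."""
    return not missing_checks(stage, completed)
```

For example, `missing_checks(Stage.DEVELOPMENT, {"prompt_assessment"})` would report that the guardrails and the prompt library are still outstanding, so the project stays in that stage until they are done.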

Below is an example of how AI-specific risk measures can be incorporated into a standard development process.

A checklist for lightweight governance

Before getting frustrated about AI initiatives stalling, take a moment to assess whether your lightweight governance framework is in place. If you can’t confidently say “yes” to the following, it may be time to slow down to speed up.

- Do you have a structured way to gather AI ideas?

- Do you use an AI-specific method for assessing risk vs. value?

- Have you determined how you will measure AI project success?

- Have you incorporated AI-specific risk assessments into requirements gathering?

- Are your designers and developers following AI-specific best practices?

- Have you integrated AI considerations into your testing strategy?

- Do you have a go-live checklist that includes AI-specific considerations?

By implementing lightweight governance, organizations can move faster—not by rushing, but by reducing uncertainty and creating a clear path forward.

How Pariveda can help

At Pariveda, we help organizations move forward with confidence by implementing lightweight governance that balances speed, risk, and value. Our value discovery workshops ensure that AI initiatives align with strategic goals, while our approach to governance keeps risk management at the forefront of every decision. If your AI initiatives are stuck or you need guidance on AI governance, reach out to Pariveda—we’re here to help you turn ideas into impact.