As AI transforms how organizations interact with information, the need to unlock insights from unstructured data is becoming increasingly relevant. Retrieval-Augmented Generation (RAG) has emerged as a powerful technique to address this challenge, enhancing language models by combining them with external knowledge retrieval to generate more accurate, contextualized responses. When embedded into a modern data platform, RAG enables enterprises to bridge the gap between structured and unstructured data, offering a new layer of interaction through natural language interfaces. This article explores the framing of RAG as a database, the role of supporting technologies like vector databases and large language models, and the implications of incorporating RAG-based systems into enterprise data architectures to deliver smarter, more intuitive data experiences.

Core components of a RAG solution

A RAG solution consists of several components that together allow an organization to unlock the hidden value in its data: the RAG process itself, a vector database, and the transformer architecture that underpins most large language models.

Retrieval-Augmented Generation (RAG)

Retrieval-Augmented Generation (RAG) is a technique that enhances the capabilities of language models by combining them with external knowledge sources. Instead of relying solely on pre-trained parameters, RAG dynamically retrieves relevant documents or data from a knowledge base and uses this information to generate more accurate and contextually informed responses. This approach is valuable when dealing with complex queries or niche topics that may not be well-represented in a model’s training data. By grounding responses in more relevant and real-time information, RAG increases the factual accuracy and relevance of generated content.

Despite its advantages, RAG comes with several challenges. One major drawback is its dependence on the quality and relevance of the retrieved documents; if the retrieval step fails or brings in misleading content, the final output can be flawed. Additionally, RAG systems can be computationally intensive, as they involve both retrieval and generation processes, which may require specialized infrastructure. Another limitation is that RAG does not inherently verify or reason about the correctness of retrieved content—it simply assumes the retrieved information is reliable and integrates it into its output.

Corrective RAG addresses some of these limitations. It introduces mechanisms to evaluate and adjust the retrieved documents before generation, such as filtering out irrelevant or low-quality data, or using feedback loops to refine the retrieval process. This corrective step helps prevent the propagation of errors and improves the alignment between the retrieved knowledge and the user query. As a result, Corrective RAG makes the overall system more robust, transparent, and trustworthy, enhancing the quality and reliability of generated responses in real-world applications.
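The corrective step can be sketched as a gate that sits between retrieval and generation. The example below is a minimal sketch, assuming each retrieved document already carries a relevance score in [0, 1] from some grader (for example, a cross-encoder or an LLM acting as a judge). The `Doc` type, the two thresholds, and the three-way "use / refine / fallback" triage are illustrative assumptions, not a specific product's API.

```python
from dataclasses import dataclass


@dataclass
class Doc:
    text: str
    score: float  # relevance in [0, 1] from an external grader (assumed)


def correct_retrieval(docs, upper=0.7, lower=0.3):
    """Decide what to do with retrieved docs before generation.

    Returns an (action, kept_docs) pair:
      "use":      at least one doc is clearly relevant; keep only those
      "refine":   nothing clearly relevant, but some docs are borderline;
                  keep them and flag the query for another retrieval pass
      "fallback": nothing usable; do not ground the answer on these docs
    """
    relevant = [d for d in docs if d.score >= upper]
    if relevant:
        return "use", relevant
    borderline = [d for d in docs if d.score > lower]
    if borderline:
        return "refine", borderline
    return "fallback", []


docs = [Doc("Refund policy: 30 days", 0.82), Doc("Office hours", 0.12)]
action, kept = correct_retrieval(docs)
print(action, [d.text for d in kept])  # use ['Refund policy: 30 days']
```

In a fuller system, the "refine" branch might rewrite the query or search another source, and the "fallback" branch might have the model say it does not know rather than guess.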

Vector databases

Vector databases are specialized data stores designed to efficiently handle high-dimensional vector data, which is essential for tasks involving similarity search and semantic understanding. Unlike traditional databases that rely on structured, relational data, vector databases enable rapid retrieval based on proximity in vector space, making them ideal for applications in machine learning, recommendation systems, image recognition, and natural language processing. These databases use indexing techniques like approximate nearest neighbor (ANN) search to scale high-performance similarity queries across large datasets, improving the relevance of search results based on meaning rather than exact matches.
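To make "approximate" concrete, the sketch below implements random-hyperplane locality-sensitive hashing (LSH), one of the simplest ANN schemes for cosine similarity. It is an illustration in Python with NumPy, not how any particular vector database indexes data internally; the class name and the `n_planes` and `seed` parameters are assumptions.

```python
from collections import defaultdict

import numpy as np


class HyperplaneLSH:
    """Random-hyperplane LSH for cosine similarity, with a single hash table.

    Each random hyperplane contributes one bit (which side a vector falls on).
    Vectors with the same bit pattern share a bucket, and a query is compared
    exactly only against the vectors in its own bucket.
    """

    def __init__(self, dim, n_planes=8, seed=0):
        rng = np.random.default_rng(seed)
        self.planes = rng.standard_normal((n_planes, dim))
        self.buckets = defaultdict(list)  # bit pattern -> list of vector ids
        self.vectors = []                 # unit-normalized stored vectors

    def _key(self, v):
        # The sign pattern is unchanged by positive scaling, so raw and
        # normalized versions of a vector hash to the same bucket.
        return tuple((self.planes @ v > 0).tolist())

    def add(self, v):
        v = np.asarray(v, dtype=float)
        idx = len(self.vectors)
        self.vectors.append(v / np.linalg.norm(v))
        self.buckets[self._key(v)].append(idx)
        return idx

    def query(self, q, k=3):
        q = np.asarray(q, dtype=float)
        q = q / np.linalg.norm(q)
        candidates = self.buckets.get(self._key(q), [])
        # Exact cosine ranking, but only over the candidate bucket.
        ranked = sorted(candidates, key=lambda i: -float(self.vectors[i] @ q))
        return ranked[:k]


rng = np.random.default_rng(1)
data = rng.standard_normal((200, 16))
index = HyperplaneLSH(dim=16)
for v in data:
    index.add(v)
print(index.query(data[42], k=1))  # [42]
```

Because each query scans only one bucket, a true nearest neighbor that falls just across a single hyperplane is missed. Production ANN indexes (for example, multiple hash tables or HNSW graphs) spend extra memory to recover that recall while still avoiding a full scan.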

In RAG systems, vector databases play a critical role in enhancing the accuracy and contextuality of generated responses. When a user poses a query, the RAG system embeds it into a vector and uses a vector database to retrieve semantically similar documents or passages from a knowledge base. These results are then used as context for a language model to generate informed responses. This approach enables the model to go beyond its static training data, effectively accessing domain-specific information, which is particularly useful in enterprise use cases or dynamic content environments.
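The last step of that query-time flow, handing the retrieved passages to the language model as context, can be sketched as prompt assembly. This is a minimal sketch, assuming retrieval has already returned ranked `(source_id, text)` pairs; the function name, the instruction wording, and the character budget (a crude stand-in for a token limit) are assumptions.

```python
def build_grounded_prompt(question, passages, max_chars=2000):
    """Assemble an LLM prompt from retrieved passages.

    passages: list of (source_id, text) pairs, already ranked by similarity.
    Passages are added in rank order until the character budget is spent.
    """
    context_lines = []
    used = 0
    for source_id, text in passages:
        line = f"[{source_id}] {text}"
        if used + len(line) > max_chars:
            break  # stop before exceeding the context budget
        context_lines.append(line)
        used += len(line)
    context = "\n".join(context_lines)
    return (
        "Answer the question using only the context below. "
        "Cite sources by their bracketed id. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_grounded_prompt(
    "How long is the refund window?",
    [("policy-12", "Refunds are accepted within 30 days of purchase."),
     ("faq-3", "Shipping takes 5 to 7 business days.")],
)
print(prompt)
```

Tagging each passage with its source id lets the answer point back to the documents it relied on, which also makes later corrective checks easier.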

Transformer

Bruce Ballengee, founder and former CEO of Pariveda Solutions, observed in conversation that the transformer used in LLMs resembles a database's index and query engine. This observation fits the broader view of RAG as a database and warrants a closer look at the transformer architecture used in LLMs.

A transformer is a type of neural network architecture that underpins modern large language models (LLMs). Introduced in 2017, the transformer architecture revolutionized natural language processing (NLP) by moving away from sequential processing models [1]. At its core, the transformer uses a mechanism called self-attention, which allows the model to evaluate and weigh the importance of different words in a sequence, regardless of their position. This enables it to understand relationships between words across long distances in a sentence or paragraph, offering a more holistic grasp of context.
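The index-and-query analogy can be made concrete with the scaled dot-product attention formula from [1], softmax(QKᵀ / sqrt(d_k)) V. The NumPy sketch below is a simplification (a single head, no masking, and no learned projections); in a real transformer, Q, K, and V are learned linear projections of the same token sequence.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the row max for numerical stability before exponentiating.
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def scaled_dot_product_attention(Q, K, V):
    """Compute softmax(Q K^T / sqrt(d_k)) V for one head, without masking.

    Database reading: every query row is scored against every key (the
    "index"), and the result is a weighted blend of the value rows rather
    than a single exact-match row.
    """
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)     # (n_queries, n_keys) match scores
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights


# Three well-separated keys, each paired with a 2-d value.
K = np.eye(3) * 10.0
V = np.array([[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]])
Q = np.array([[0.0, 10.0, 0.0]])  # a query that closely matches key 1
out, w = scaled_dot_product_attention(Q, K, V)
print(np.round(out, 3))  # [[0. 1.]]
```

When one key dominates, attention behaves almost like an exact lookup; when several keys match partially, it returns a blend, which is where the analogy to a conventional database query breaks down.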

One of the key strengths of transformers is their scalability and parallelization. Unlike previous models that processed input sequentially (limiting training speed and performance), transformers process all tokens simultaneously, making training on large datasets much more efficient [1]. This efficiency, combined with the ability to learn complex patterns in data, allows transformers to excel in a wide range of NLP tasks. These include language translation, question answering, text summarization, and sentiment analysis. Their architecture also allows for pretraining on vast amounts of unlabelled text, followed by fine-tuning on task-specific data, making them incredibly versatile.

Transformers are particularly strong at capturing the nuances of human language, such as word ambiguity and context-dependent meaning. They can generate contextually relevant text, complete sentences or documents, and even “reason” through chains of logic when prompted [2]. Beyond language, transformers have been adapted for use in fields like vision, biology, and reinforcement learning [3]. Their ability to model relationships between elements in a sequence (not just words) means they’re powerful tools for a broad range of sequence modeling problems.

Why data quality matters for RAG

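Data is at the heart of a RAG solution. RAG is a mechanism for putting an organization’s private data to work by leveraging the power of a transformer-based large language model. Because the model grounds its answers in whatever the retrieval step returns, the quality of that data directly limits the quality of the answers.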

Structured vs. unstructured data: Key differences

Structured and unstructured data differ primarily in how they are organized and stored. Structured data is highly organized and formatted so that traditional database technology and downstream BI tools can search it easily. It resides in rows and columns with clearly defined data types and a schema. Examples include records in a CRM, sales transactions in spreadsheets, or sensor data from IoT devices. Because of its consistency and predictability, structured data is easy to query and to incorporate into large-scale data platforms with BI reporting tools.

In contrast, unstructured data lacks a predefined format or schema, making it more complex to process and analyze. This includes, but is not limited to, text and PDF documents, emails, audio files, images, videos, social media posts, and web pages. Unstructured data represents most of the information generated today, and while it is rich in context and insight, it requires specialized tools such as natural language processing (NLP), computer vision, or machine learning to extract meaningful patterns or semantic understanding. As organizations seek to leverage this wealth of unstructured information, technologies like vector databases and large language models are increasingly being used to facilitate search, summarization, and analysis.

Enterprise use cases for unstructured data and RAG

Enterprise use cases leveraging unstructured data are diverse, and RAG is particularly well-suited to address many of them by enhancing the ability of AI systems to understand and leverage domain-specific information. One major area is enterprise knowledge management. Organizations often store vast amounts of documentation, internal wikis, PDFs, meeting notes, emails, and policies that are difficult to search and process with standard tools. A RAG system can ingest this data, index it as vector embeddings, and let users ask natural language questions and receive contextualized responses based on the latest documents.

Another impactful use case is customer support and service automation. Companies often deal with large volumes of support tickets, chat logs, user manuals, and FAQs, much of it unstructured. A RAG-based virtual assistant can retrieve relevant past conversations or documents to generate accurate, personalized answers, reducing the burden on human agents and improving response times. Additional applications include legal and compliance research (retrieving relevant clauses from contracts or regulations), healthcare support (finding information in clinical notes or research papers), and sales enablement (surfacing relevant case studies or pitch materials during client interactions). Across all these domains, RAG enhances the utility of unstructured data by making it accessible and actionable through natural language interaction.

Integrating RAG into your enterprise data platform

Introducing RAG into a data platform opens the door to some or all of these enterprise use cases. Products on the market already target the enterprise knowledge management case. With varying degrees of success, products such as Glean and Microsoft Copilot aim to capture and index, in a vector database, all the information stored in documents throughout the organization. Beyond capturing and vectorizing the data, these products provide a natural language interface for querying and searching it, unlocking and leveraging the shared knowledge of the organization.

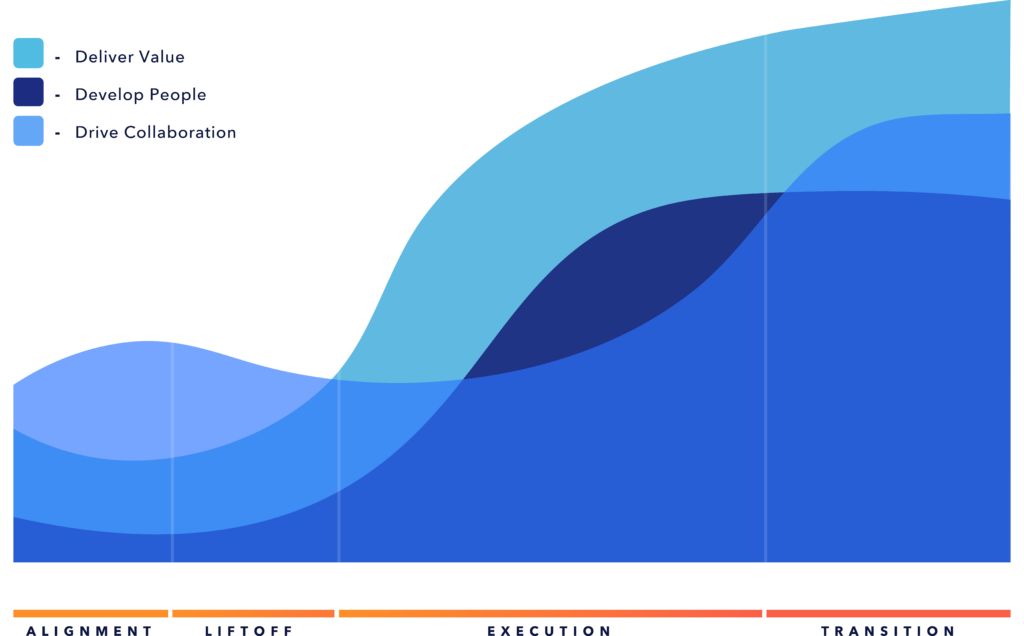

Pariveda Solutions’ Modern Data Enterprise (MDE) is a blueprint for a modern data platform designed to unlock the value of an organization’s data. It is designed to grow organically with the business by being rabidly use-case driven and always mapping data products to value. Until recently, MDE focused on ingesting and processing structured data. The accompanying diagram features an illustrative data pipeline that ingests unstructured data, splits it into smaller chunks so that each carries focused, relevant context for future queries, and adds the chunks to the vector database.
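One common way to implement the chunking step is a fixed-size sliding window with overlap. The sketch below counts whitespace-delimited words for simplicity; real pipelines typically count model tokens and often split on headings or sentences first. The function name and the default sizes are assumptions for illustration, not MDE settings.

```python
def chunk_words(text, chunk_size=200, overlap=40):
    """Split text into overlapping windows of whitespace-delimited words.

    The overlap keeps a sentence that straddles a chunk boundary
    retrievable from either neighboring chunk.
    """
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


text = " ".join(f"w{i}" for i in range(10))
print(chunk_words(text, chunk_size=4, overlap=1))
# ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```

Smaller chunks make each retrieved passage more focused, while larger ones preserve more surrounding context; the right size depends on the documents and the questions users ask.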

This approach to incorporating unstructured data into a data platform makes it possible to have a “conversation with the data”. With an LLM as part of the conversational interface consuming the vectorized data, users can hold context-based conversations to explore the data and gain a detailed, holistic view of the information it contains. If the work to be done with the vectorized data is less tolerant of inaccuracy or carries additional risk to the organization, as with a public-facing interface, incorporating a Corrective RAG process into the conversational interface reduces the risk of LLM hallucination and of inaccurate or false information being returned to the user.

A drawback of this data pipeline is that it isolates the vectorized data from the structured data in the data lake. The vectorized data is therefore harder to use in the context of the data lake as a whole, and merging structured and unstructured data requires complex, potentially error-prone orchestration.

A second data pipeline for working with unstructured data structures it at the time of ingestion. This type of pipeline is not a RAG-based solution, but it introduces an additional way to leverage generative AI in an enterprise data platform. Given a detailed prompt, the LLM analyzes each piece of data passed to the pipeline and converts it into a structured format, such as a JSON document.

Implementing this kind of data pipeline allows the converted data to be included as part of other enterprise use cases, such as enterprise reporting.
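A minimal sketch of this structuring step follows, assuming the LLM is reachable through some `call_llm(prompt) -> str` function (a placeholder, not a real client API) and a hypothetical three-field schema for customer emails. The validation matters because nothing guarantees the model returns well-formed JSON; rejecting bad records keeps them out of downstream reports.

```python
import json

# Hypothetical target schema for, e.g., customer emails.
REQUIRED_FIELDS = {"customer_name": str, "product": str, "sentiment": str}
ALLOWED_SENTIMENT = {"positive", "neutral", "negative"}

PROMPT_TEMPLATE = (
    "Extract the following fields from the text and reply with JSON only: "
    "customer_name (string), product (string), "
    "sentiment (one of positive, neutral, negative).\n\nText:\n{text}"
)


def structure_document(text, call_llm):
    """Turn one unstructured document into a validated dict.

    call_llm is any function that takes a prompt string and returns the
    model's raw text reply (a placeholder for whatever LLM client is used).
    """
    raw = call_llm(PROMPT_TEMPLATE.format(text=text))
    # Non-JSON replies raise json.JSONDecodeError, a ValueError subclass.
    record = json.loads(raw)
    for name, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(record.get(name), expected_type):
            raise ValueError(f"missing or mistyped field: {name}")
    if record["sentiment"] not in ALLOWED_SENTIMENT:
        raise ValueError(f"unexpected sentiment: {record['sentiment']}")
    return record


def fake_llm(prompt):
    # Stand-in for a real model call, returning a canned reply.
    return '{"customer_name": "Ana", "product": "Router X", "sentiment": "negative"}'


print(structure_document("Ana says her Router X keeps dropping.", fake_llm))
```

Validated records can then be written to a table like any other structured source, which is what lets them join existing reporting.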

Ultimately, the choice is not either/or; let the use cases guide which pipeline to use. Each pipeline serves a different user experience, and the two can coexist in one data platform to provide a robust end-user experience and data management strategy.

Getting started: Five steps to implement RAG effectively

The following five steps can help unlock the value of RAG as part of an organization’s overall data strategy.

Inventory and prioritize unstructured data sources

As with any data project, start by identifying and cataloging the types of unstructured data sources that exist across the organization—documents, PDFs, internal wikis, chat logs, support tickets, etc. Prioritize sources based on:

- Frequency of use in decision-making or customer support

- Relevance to prioritized use cases

- Ease of access and existing storage formats

The outcome of this step should be a clear picture of where the organization’s unstructured knowledge lives and whether its quality is sufficient to make it usable through RAG pipelines.
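Scoring each source against the three criteria makes the prioritization explicit and repeatable. The sketch below uses 1-to-5 ratings and a weighted sum; the scale, the weights, and the example sources are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Source:
    name: str
    usage: int          # 1-5: how often it informs decisions or support
    relevance: int      # 1-5: fit with prioritized use cases
    accessibility: int  # 1-5: ease of access and usable storage format


# Hypothetical weights; tune them to the organization's priorities.
WEIGHTS = {"usage": 0.4, "relevance": 0.4, "accessibility": 0.2}


def priority(src):
    # Weighted sum of the three criteria.
    return (WEIGHTS["usage"] * src.usage
            + WEIGHTS["relevance"] * src.relevance
            + WEIGHTS["accessibility"] * src.accessibility)


def rank_sources(sources):
    return sorted(sources, key=priority, reverse=True)


inventory = [
    Source("support tickets", usage=5, relevance=4, accessibility=3),
    Source("internal wiki", usage=3, relevance=3, accessibility=5),
    Source("scanned contracts", usage=2, relevance=5, accessibility=1),
]
print([s.name for s in rank_sources(inventory)])
# ['support tickets', 'internal wiki', 'scanned contracts']
```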

Implement a vector database and document chunking pipeline

Set up a vector database (e.g., Pinecone or Weaviate, or a similarity-search library such as FAISS) and build a pipeline to:

- Ingest unstructured data

- Chunk it into semantically meaningful pieces

- Embed those chunks using transformer-based embedding models

- Store the resulting vectors for retrieval

Implementing the vector database and chunking pipeline creates the foundation RAG requires to operate and enables similarity-based querying.
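The embed-and-store stages, and the similarity query they enable, can be sketched with an in-memory store standing in for a vector database. Assumptions: `toy_embed` is a hypothetical hashed bag-of-words function used only so the example runs without downloading a model (it matches shared words, not meaning), and the class and method names are illustrative rather than any product's API. The search scans every vector exactly; a real vector database would use an ANN index instead.

```python
import hashlib
import re

import numpy as np


def toy_embed(text, dim=1024):
    """Stand-in for a transformer embedding model: a hashed bag of words.

    Captures word overlap only, not meaning; replace with a real model.
    """
    v = np.zeros(dim)
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        # md5 gives the same bucket on every run (unlike Python's hash()).
        h = int(hashlib.md5(word.encode()).hexdigest(), 16)
        v[h % dim] += 1.0
    norm = np.linalg.norm(v)
    return v / norm if norm else v


class InMemoryVectorStore:
    """Stores (vector, chunk text, metadata) and answers top-k cosine queries."""

    def __init__(self, embed=toy_embed):
        self.embed = embed
        self.vectors, self.chunks, self.meta = [], [], []

    def add(self, chunk, **metadata):
        self.vectors.append(self.embed(chunk))
        self.chunks.append(chunk)
        self.meta.append(metadata)  # e.g. source file, for citations/joins

    def search(self, query, k=3):
        if not self.vectors:
            return []
        q = self.embed(query)
        sims = np.stack(self.vectors) @ q  # cosine, since vectors are unit length
        top = np.argsort(-sims)[:k]
        return [(self.chunks[i], self.meta[i], float(sims[i])) for i in top]


store = InMemoryVectorStore()
store.add("Refunds are accepted within 30 days of purchase.", source="policy.pdf")
store.add("The cafeteria opens at 8 am.", source="facilities-wiki")
text, meta, score = store.search("How many days do refunds take?", k=1)[0]
print(meta["source"])
```

Keeping metadata such as the source file next to each vector is what later lets answers cite their origin.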

Deploy a RAG-based interface for internal use cases

Choose a high-impact internal use case. Potential high-value candidates include:

- Enterprise knowledge search

- Customer support response generation

- Legal/compliance research

Deploy a RAG application that lets users query the indexed data in natural language and receive contextualized answers. A focused pilot validates value quickly, builds confidence, and generates feedback for a broader rollout.

Incorporate corrective RAG to improve output reliability

As the use case matures—especially if it involves external-facing outputs or high risk (e.g., legal, compliance, public-facing tools)—add Corrective RAG mechanisms:

- Apply data governance policies and regulatory compliance rules to results

- Filter low-quality or irrelevant results

- Use user feedback loops to refine retrieval

- Integrate fact-checking or summarization steps

Corrective RAG reduces hallucination risk and builds trust in AI-generated responses.
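The first two mechanisms, governance and quality filtering, can be applied as a post-retrieval filter before anything reaches the model. A minimal sketch, assuming each chunk was tagged at ingestion time with access groups and a sensitivity classification; the field names, labels, and the 0.5 score cutoff are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Chunk:
    text: str
    score: float  # retrieval similarity
    # Access-control tags assigned at ingestion (assumed); empty = unrestricted.
    allowed_groups: set = field(default_factory=set)
    classification: str = "internal"  # e.g. "public", "internal", "restricted"


def governed_results(chunks, user_groups, audience="internal", min_score=0.5):
    """Drop chunks that score too low or that the requester may not see.

    For a public-facing audience, only chunks classified "public" pass.
    """
    out = []
    for c in chunks:
        if c.score < min_score:
            continue  # low-quality or irrelevant result
        if c.allowed_groups and not (c.allowed_groups & set(user_groups)):
            continue  # requester lacks the required entitlement
        if audience == "public" and c.classification != "public":
            continue  # never expose internal content externally
        out.append(c)
    return out


faq = Chunk("Public FAQ answer", 0.9, classification="public")
hr = Chunk("HR leave policy", 0.8, allowed_groups={"hr"})
print([c.text for c in governed_results([faq, hr], user_groups=["sales"])])
```

Filtering before generation, rather than after, means restricted text never enters the model's context in the first place.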

Design dual data pipelines to support coexistence

Support two complementary workflows:

- RAG pipeline for conversational interfaces and exploration

- LLM-to-structured pipeline for transforming unstructured inputs into structured formats (e.g., JSON) that integrate with existing BI/reporting tools

This ensures unstructured data is not isolated from structured analytics and provides flexibility to choose the right pipeline per use case.

The future of RAG in enterprise data strategy

Incorporating Retrieval-Augmented Generation (RAG) into the architecture of modern data platforms represents a transformative shift in how organizations can interact with and derive value from their unstructured data. By leveraging vector databases and the power of large language models, enterprises can move beyond their current set of use cases and engage in dynamic, conversational exploration of their internal knowledge. Corrective RAG further strengthens this interaction by improving the reliability and accuracy of generated responses, making AI-driven insights more trustworthy for high-stakes use cases. While challenges remain, flexible pipeline architecture allows organizations to adapt their approach based on the work to be done. Ultimately, treating RAG like a database unlocks a new paradigm in enterprise intelligence, where data is not only retrieved, but contextualized, making it truly actionable.

Ready to explore how RAG can elevate your enterprise data strategy? Get in touch to start building a smarter, more connected data platform.

References

- [1] Vaswani, A., et al. (2017). Attention Is All You Need.

- [2] Brown, T., et al. (2020). Language Models are Few-Shot Learners.

- [3] Parisotto, E., et al. (2020). Stabilizing Transformers for Reinforcement Learning.