The hype surrounding artificial intelligence (AI) in healthcare is significant. The market is projected to reach a value of about $36.1 billion by 2025 — yep, you read that right — with a compound annual growth rate of over 50%.

That number reflects AI’s transformational potential. Practitioners have an easy time imagining the hazy yet exciting future of AI-enhanced healthcare. Adoption, however, still faces significant barriers.

In practice, there seems to be a gap between the theory of digital transformation in healthcare and its application to day-to-day patient needs.

Starting small and implementing new technology

Compared with other sectors where AI adoption has been swifter, healthcare is hampered by operational challenges, regulatory friction, and a lack of follow-through in digital transformation. Case in point: A cohesive data strategy is the first step in implementing analytics (and should be an early step in any digital transformation), but 56% of hospitals don’t even have a data-governance strategy.

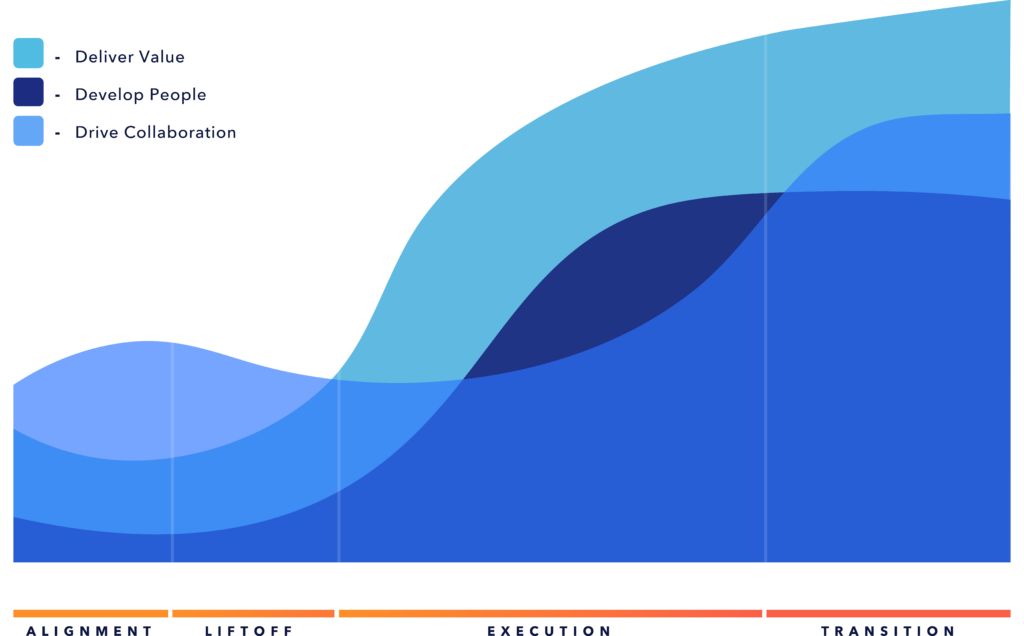

When we hear about the potential scope of digital transformation in the healthcare industry, the image can be so vast that it’s difficult to see the entire journey at once. Consequently, spotting the first step to implementation can be difficult for providers.

The solution is to adopt new technology and AI in smaller increments. If providers start with incremental changes to their everyday digital workflows (using analytics to predict scheduling, for example), doctors will become used to working with this technology in a safe environment. Eventually, this will empower them to make better care decisions informed by new digital tools.

First, though, providers need to trust AI.

AI’s future applications in healthcare

Right now, there’s often a fear of completely trusting data insights. In one notable example, a Harvard professor compared machine learning in healthcare to asbestos during a recent precision medicine conference. Whether or not the claim is well founded, it gives us a good idea of just how much some people mistrust emerging technologies.

If a computer suggests that a patient has a high likelihood of relapsing, for example, the doctor should be able to view that suggestion with confidence and make care decisions accordingly. Without that trust, all the advanced technology in the world can’t actually improve outcomes.

It’s helpful to recognize and reinforce that AI in healthcare should focus on supporting and augmenting doctors rather than replacing them. In fact, AI innovators tell providers that their priority is automating the mundane. They’re not trying to fly healthcare to the moon; they want to make small yet meaningful impacts to aid treatment.

Building on that trust, doctors must be able to understand data on a human level. If data from a monitoring device shows that a patient’s blood pressure spiked the last three times she left the hospital and returned to work, the doctor can confidently suggest that she needs to wait a little longer before returning to the office.
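To make the blood pressure example concrete, here is a minimal sketch of the kind of check a monitoring tool might run before surfacing that pattern to a doctor. Everything in it is an assumption made for illustration: the `Episode` structure, the function names, the sample readings, and especially the 15 mmHg threshold, which is an arbitrary placeholder and not clinical guidance.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass
class Episode:
    """Hypothetical summary of one discharge followed by a return to work."""
    systolic_before_return: list[float]  # readings (mmHg) while recovering at home
    systolic_after_return: list[float]   # readings (mmHg) after going back to work


def spiked(episode: Episode, threshold_mmhg: float = 15.0) -> bool:
    """True when mean systolic pressure rose by at least the threshold.

    The default threshold is an illustrative placeholder, not a clinical value.
    """
    rise = mean(episode.systolic_after_return) - mean(episode.systolic_before_return)
    return rise >= threshold_mmhg


def repeated_spike(episodes: list[Episode], last_n: int = 3) -> bool:
    """True when each of the most recent `last_n` episodes shows a spike."""
    recent = episodes[-last_n:]
    return len(recent) == last_n and all(spiked(e) for e in recent)


# Made-up readings: the first episode is stable, the last three show a rise.
history = [
    Episode([122, 124, 121], [126, 125, 127]),
    Episode([120, 123, 122], [140, 142, 139]),
    Episode([121, 119, 122], [138, 141, 143]),
    Episode([123, 122, 124], [141, 144, 140]),
]

if repeated_spike(history):
    print("Pattern found: pressure rose after each of the last three returns to work.")
```

The check only flags a pattern. Deciding what it means for this patient, and whether she should wait longer before returning to the office, stays with the doctor.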

To reach that point, doctors need to be able to integrate expertise regarding their patients (and those patients’ habits) with data that makes that expertise more effective in driving outcomes. The more complete and integrated the predictive data is, the more clarity and calm doctors will be able to bring to the patient’s experience.

When implementing new technology in healthcare, providers must start by thinking small. Trust data to support medical staff in direct, tangible ways, and from there, build up to more transformational deployments — no hype necessary.